SAP Data and Analytics Advisory Methodology

Last week, SAP released its Data and Analytics Advisory Methodology, which aims to guide the design and validation of solution architectures for data-driven business innovations.

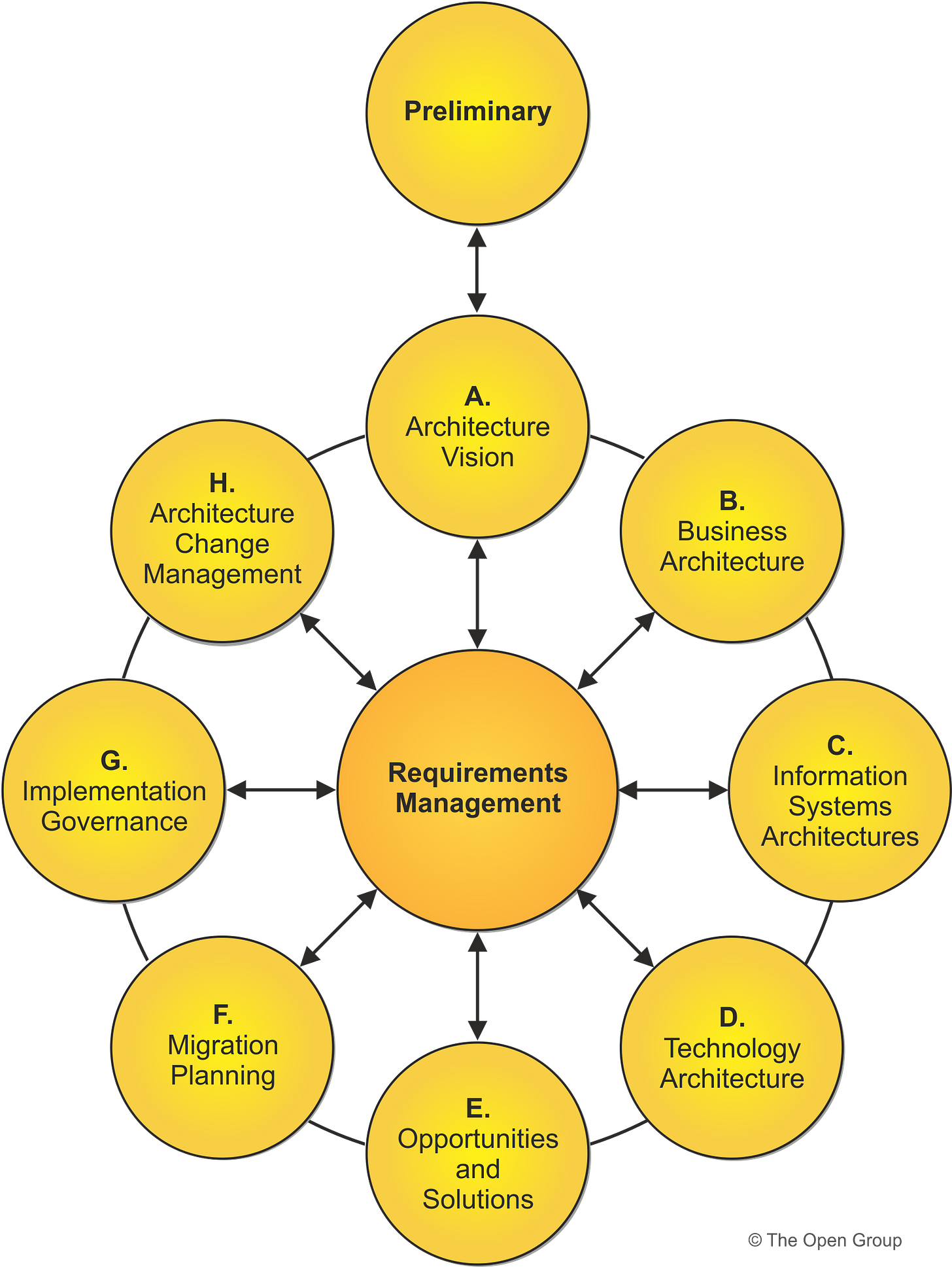

Like its older siblings, the SAP Integration Solution Advisory Methodology and the SAP Application Extensibility Methodology, the new methodology is based on the classic TOGAF model and complements the other two in the bigger picture.

The model is accompanied by:

a data domain reference model to establish a common understanding of SAP data,

a data & analytics capability model to better support the architecture definition, and

data-to-value use case patterns and related reference architectures to accelerate solution architecture development.

Beyond that, the framework is designed to accommodate new data and analytics capabilities, including those of the Open Data Ecosystem.

The core premise behind the model is the concept of Data-to-Value, which is realized through a Data Product: a controlled dataset provided by a data domain and composed of data, metadata and standard APIs to access it. Every data-to-value business scenario builds on this fundamental concept to provide the right data, in the right quality and format, easily accessible to data consumers.
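To make the definition concrete, here is a minimal Python sketch of a data product as a dataset bundled with its metadata and a single access method owned by one domain. The class name `DataProduct`, its fields and the `read` method are hypothetical illustrations, not an SAP API; a real data product would expose standard APIs rather than an in-memory filter.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

Record = Dict[str, Any]


@dataclass
class DataProduct:
    """Hypothetical model: a controlled dataset owned by one data domain."""
    name: str
    domain: str
    records: List[Record]                                    # the data itself
    metadata: Dict[str, str] = field(default_factory=dict)   # descriptive metadata

    def read(self, predicate: Callable[[Record], bool] = lambda r: True) -> List[Record]:
        # Access API: consumers get filtered copies instead of touching the storage.
        return [dict(r) for r in self.records if predicate(r)]


sales = DataProduct(
    name="SalesOrders",
    domain="Sales",
    records=[
        {"order_id": 1, "region": "EMEA", "amount": 120.0},
        {"order_id": 2, "region": "APJ", "amount": 80.0},
    ],
    metadata={"owner": "Sales domain team", "refresh": "daily"},
)

emea_orders = sales.read(lambda r: r["region"] == "EMEA")
print(emea_orders)  # [{'order_id': 1, 'region': 'EMEA', 'amount': 120.0}]
```

Returning copies keeps consumers from mutating the domain's data, which mirrors the "controlled dataset" idea in a very simplified way.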

The Data Product ABCs framework notes that a data product should, at a minimum, answer the following:

Accountability - e.g., "Who's responsible for this data?"

Boundaries - e.g., "What is the data?"

Contracts and Expectations - e.g., "What are the sharing agreements, consented uses, and policies?"

Downstream Consumers - e.g., "Who are the current consumers?"

Explicit Knowledge - e.g., "What is the meaning?"

Along with this, the data product should offer a consistent and usable interface to the data, agreed upon by the stakeholders.
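One way to operationalize the ABCs is as a completeness check on a data product descriptor. The sketch below assumes a hypothetical `DataProductDescriptor` with one field per question plus the agreed interface, and simply reports which questions are still unanswered. It treats every question as mandatory, which is a simplification (a brand-new product may legitimately have no consumers yet).

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DataProductDescriptor:
    accountability: str = ""                                       # A: who is responsible?
    boundaries: str = ""                                           # B: what is the data?
    contracts: List[str] = field(default_factory=list)             # C: agreements, consented uses, policies
    downstream_consumers: List[str] = field(default_factory=list)  # D: current consumers
    explicit_knowledge: str = ""                                   # E: what does it mean?
    interface: str = ""                                            # agreed, consistent access interface


def missing_answers(d: DataProductDescriptor) -> List[str]:
    # An empty string or empty list counts as "not answered yet".
    checks = {
        "Accountability": d.accountability,
        "Boundaries": d.boundaries,
        "Contracts and Expectations": d.contracts,
        "Downstream Consumers": d.downstream_consumers,
        "Explicit Knowledge": d.explicit_knowledge,
        "Interface": d.interface,
    }
    return [name for name, value in checks.items() if not value]


draft = DataProductDescriptor(
    accountability="Finance data steward",
    boundaries="Posted general ledger line items, current fiscal year",
    explicit_knowledge="Amounts in company-code currency",
)
print(missing_answers(draft))
# ['Contracts and Expectations', 'Downstream Consumers', 'Interface']
```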

The organization, for its part, should complement this with a federated data governance method that balances centralization and decentralization.

As is typical with SAP, the methodology defines a set of technical use cases that leverage this data; the output of these technical use cases then serves as input for business use cases.

Naturally, each use case comes with a reference architecture, which extends the approach into a complete solution.

Didn't we note that this is modelled on TOGAF?

The model is split into four phases, focussing on data-to-value opportunities:

In Phase I, the objectives and scope of the investigation are defined, and the as-is situation is analysed to identify data-to-value opportunities or improvement potential.

Phases II and III are executed for each business outcome, where a business outcome describes a measurable result.

Phase II focusses on analysing the use cases related to the business outcome and defining requirements for the data product and the solution architecture.

In Phase III, the consolidated solution requirements are mapped to the technical capabilities provided by the Data & Analytics Capability Model and aligned with potential software solutions. The use case categories and patterns should also be reviewed to check whether related reference architectures, especially SAP BTP reference architectures, fit. These activities may yield several architecture options that need to be assessed and evaluated, and the preferred option can then be validated with a proof of concept to ensure feasibility.

Finally, Phase IV deals with the impact on organizational skills and on any data governance processes that might be affected. As a last step, a timeline for implementing the target architecture and organizational changes is created, either as a roadmap (high-level timeline) or as a detailed project plan.
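Phases II and III repeat per business outcome, while Phases I and IV frame the whole engagement. The hypothetical helper `advisory_plan` below only lists the phases in execution order for a given set of outcomes; it illustrates the control flow of the methodology and is not part of any SAP tooling.

```python
from typing import List


def advisory_plan(business_outcomes: List[str]) -> List[str]:
    # Phase I runs once for the whole investigation.
    plan = ["Phase I: define objectives and scope, analyse as-is, identify data-to-value opportunities"]
    # Phases II and III repeat for every business outcome (a measurable result).
    for outcome in business_outcomes:
        plan.append(f"Phase II [{outcome}]: analyse use cases, define data product and architecture requirements")
        plan.append(f"Phase III [{outcome}]: map requirements to capabilities, check reference architectures, evaluate options")
    # Phase IV runs once, after all outcomes are covered.
    plan.append("Phase IV: assess impact on skills and governance, create roadmap or project plan")
    return plan


for step in advisory_plan(["Reduce days sales outstanding", "Improve forecast accuracy"]):
    print(step)
```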

This framework appears to be an extension aimed at better leveraging SAP Datasphere, which is already integrated with internal data. Datasphere and the framework look like products of the same thought process, and the two could integrate very well if leveraged properly.

If you look at what the Analytic Model in SAP Datasphere is expected to deliver, it is clear that everything is aimed at building a robust model:

Rich measure modelling: with calculation after aggregation, restricted measures and exception aggregation, as well as the possibility to stack all of these, users can build very complex calculation models and even refine them in SAP Analytics Cloud (SAC) stories.

Careful design of how analytics users see the data: modelers can curate which measures, attributes and associated dimensions to expose to users. This helps analytics users see exactly the data that is relevant to them, reduces the likelihood of errors and boosts performance.

Collection of user input via prompts in SAP Analytics Cloud: these can be used for subsequent calculations, filters & time-dependency. Value helps are provided too, of course.

Rich previewing possibility: modelers can inspect the result of their modelling efforts in place, because the Data Analyzer of SAP Analytics Cloud is tightly embedded into the Analytic Model editor. Slice & dice, pivoting, filtering, hierarchy usage and many more features are available to help users understand the data as it will be presented for consumption.

Time-dependency support: Analytic Models support this critical feature to let users travel back & forth in time while Lines of Business, structures & organizations are constantly evolving.

Dependency Management & Transport: complex analytic projects require careful planning and a sophisticated toolset for managing the dependencies and lifecycle of all modelling artefacts. The Analytic Model is fully integrated into the SAP Datasphere repository and thus benefits from impact & lineage analysis, change management and transport management.
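The "rich measure modelling" item packs several concepts into one sentence. The plain-Python sketch below, on made-up sales facts, shows what calculation after aggregation, a restricted measure and exception aggregation compute, and how they can be stacked. It illustrates the concepts only; it is not how SAP Datasphere implements them, and all names are hypothetical.

```python
from collections import defaultdict
from typing import Any, Dict, List

Row = Dict[str, Any]

# Hypothetical sales facts used only for illustration.
facts: List[Row] = [
    {"customer": "C1", "product": "Laptop", "revenue": 1000.0, "cost": 800.0},
    {"customer": "C1", "product": "Mouse", "revenue": 50.0, "cost": 10.0},
    {"customer": "C2", "product": "Laptop", "revenue": 1200.0, "cost": 900.0},
]


def total(rows: List[Row], measure: str) -> float:
    return sum(r[measure] for r in rows)


def margin_after_aggregation(rows: List[Row]) -> float:
    # Aggregate revenue and cost first, then compute the ratio once.
    revenue = total(rows, "revenue")
    return (revenue - total(rows, "cost")) / revenue


def margin_before_aggregation(rows: List[Row]) -> float:
    # For contrast: compute the ratio per row, then average the ratios.
    return sum((r["revenue"] - r["cost"]) / r["revenue"] for r in rows) / len(rows)


def restricted_revenue(rows: List[Row], product: str) -> float:
    # Restricted measure: revenue with a fixed filter on one dimension value.
    return total([r for r in rows if r["product"] == product], "revenue")


def avg_revenue_per_customer(rows: List[Row]) -> float:
    # Exception aggregation: SUM per customer first, then AVERAGE across customers.
    per_customer: Dict[str, float] = defaultdict(float)
    for r in rows:
        per_customer[r["customer"]] += r["revenue"]
    return sum(per_customer.values()) / len(per_customer)


print(round(margin_after_aggregation(facts), 4))   # 0.24
print(round(margin_before_aggregation(facts), 4))  # 0.4167
print(restricted_revenue(facts, "Laptop"))         # 2200.0
print(avg_revenue_per_customer(facts))             # 1125.0
# Stacking: exception aggregation on top of a product restriction.
print(avg_revenue_per_customer([r for r in facts if r["product"] == "Laptop"]))  # 1100.0
```

The gap between 0.24 and 0.4167 is why calculation after aggregation matters: a ratio of totals is usually what a business user means by "margin", whereas averaging per-row ratios over-weights small transactions.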
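Impact and lineage analysis, mentioned under dependency management, boil down to walking a dependency graph of modelling artefacts in two directions. The sketch below assumes a hypothetical graph of tables, a view, a dimension, an analytic model and an SAC story; `impact_of` walks downstream (what a change could break) and `lineage_of` walks upstream (where the data comes from). It is a conceptual illustration, not the Datasphere repository's API.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical artefact graph: each key is consumed by the artefacts listed for it.
consumers: Dict[str, List[str]] = {
    "SalesOrders (table)": ["SalesView (view)"],
    "Customers (table)": ["SalesView (view)", "CustomerDim (dimension)"],
    "SalesView (view)": ["SalesModel (analytic model)"],
    "CustomerDim (dimension)": ["SalesModel (analytic model)"],
    "SalesModel (analytic model)": ["RevenueStory (SAC story)"],
}


def walk(start: str, graph: Dict[str, List[str]]) -> List[str]:
    """Breadth-first traversal returning every node reachable from `start`."""
    seen: Set[str] = set()
    order: List[str] = []
    queue = deque(graph.get(start, []))
    while queue:
        node = queue.popleft()
        if node in seen:
            continue
        seen.add(node)
        order.append(node)
        queue.extend(graph.get(node, []))
    return order


def impact_of(artefact: str) -> List[str]:
    # Impact analysis: everything downstream that a change could break.
    return walk(artefact, consumers)


def lineage_of(artefact: str) -> List[str]:
    # Lineage analysis: invert the edges and walk upstream to the sources.
    sources: Dict[str, List[str]] = {}
    for source, targets in consumers.items():
        for target in targets:
            sources.setdefault(target, []).append(source)
    return walk(artefact, sources)


print(impact_of("Customers (table)"))
print(lineage_of("SalesModel (analytic model)"))
```

The `seen` set matters because artefacts such as the analytic model are reachable along more than one path; without it they would be reported, and re-expanded, twice.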

I am not entirely sure, but this seems to be one of the first enterprise-level data-to-value architectures proposed. The real question I have, though, is whether this model is leading the market or chasing it. Clearly, you are modelling your architecture based on the data you have, and unless some standard usage patterns are identified, I am curious how a new greenfield implementation can leverage it. There are use cases, though: for example, a major conglomerate venturing into a new business area, where it would be interesting to see how the model is designed. Another is the marriage of two separate data models during mergers and acquisitions.

References

Release of SAP Data and Analytics Advisory Methodology | SAP Blogs

Do You Know Your Data Product ABCs? | data.world

Introducing the Analytic Model in SAP Datasphere | SAP Blogs (https://blogs.sap.com/2023/03/13/introducing-the-analytic-model-in-sap-datasphere/)